Learning of Continuous and Piecewise-linear Functions by the Multigrid Algorithm

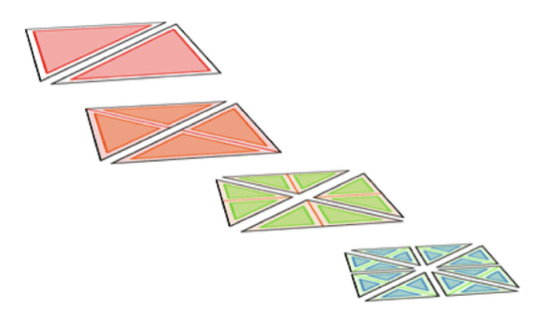

ReLU neural networks generate continuous and piecewise-linear (CPWL) mappings. An alternative family of methods learns CPWL functions directly, using local parameterizations in low-dimensional settings. These methods use a sparsity-promoting regularization to favor simpler functions. They are interpretable, and their performance is comparable to that of ReLU networks. Their computational bottleneck is solving the optimization problem that underlies learning. In this project, we focus on learning CPWL functions in the two-dimensional case. We parameterize the functions with box splines (piecewise-linear polynomials) on a grid, and we work with multiple grids of different sizes. First, we solve the optimization problem on a coarse grid and sample its solution on a finer grid. We then continue learning on the finer grid, starting from this sampled solution, to obtain better results. Our goal is to reach the same loss faster than by solving directly on a single fine grid. The student should be familiar with the basics of optimization and with notions such as sparsity. In addition, Python programming skills are required.
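The coarse-to-fine idea described above can be illustrated with a minimal Python sketch. It is not the method of the referenced paper. It assumes data on the unit square, replaces the box-spline parameterization with linear interpolation on a grid whose cells are each split along the same diagonal, omits the sparsity-promoting regularization, and uses plain gradient descent. All function names (`basis_weights`, `evaluate`, `prolong`, `fit`, `coarse_to_fine`) are hypothetical.

```python
import numpy as np

def basis_weights(pts, n):
    """Node indices and barycentric weights of each point on an n x n grid over [0, 1]^2."""
    s = np.clip(pts, 0.0, 1.0) * (n - 1)
    ij = np.minimum(s.astype(int), n - 2)      # lower-left node of the enclosing cell
    u, v = (s - ij).T                          # local coordinates inside the cell
    i, j = ij.T
    lower = u >= v                             # which triangle of the diagonally split cell
    w00 = np.where(lower, 1 - u, 1 - v)        # weight of node (i, j)
    w11 = np.where(lower, v, u)                # weight of node (i+1, j+1)
    wmid = np.where(lower, u - v, v - u)       # weight of the third vertex
    im = np.where(lower, i + 1, i)
    jm = np.where(lower, j, j + 1)
    idx = np.stack([i * n + j, (i + 1) * n + (j + 1), im * n + jm], axis=1)
    w = np.stack([w00, w11, wmid], axis=1)
    return idx, w

def evaluate(c, pts):
    """Evaluate the CPWL function with nodal values c (n x n array) at pts."""
    idx, w = basis_weights(pts, c.shape[0])
    return (c.ravel()[idx] * w).sum(axis=1)

def prolong(c):
    """Sample the coarse CPWL function on a grid with half the spacing."""
    m = 2 * c.shape[0] - 1
    g = np.linspace(0.0, 1.0, m)
    nodes = np.stack(np.meshgrid(g, g, indexing="ij"), axis=-1).reshape(-1, 2)
    return evaluate(c, nodes).reshape(m, m)

def fit(c, pts, y, steps, lr):
    """Gradient descent on the loss 0.5 * mean((f(pts) - y)^2), starting from c."""
    idx, w = basis_weights(pts, c.shape[0])
    c = c.copy()
    for _ in range(steps):
        r = (c.ravel()[idx] * w).sum(axis=1) - y   # residuals
        g = np.zeros(c.size)
        np.add.at(g, idx, w * r[:, None])          # accumulate the gradient per node
        c -= lr * g.reshape(c.shape) / len(y)
    return c

def coarse_to_fine(pts, y, n0=3, levels=3, steps=200, lr=1.0):
    """Fit on the coarsest grid, then repeatedly refine and keep fitting."""
    c = np.zeros((n0, n0))
    for level in range(levels):
        if level > 0:
            c = prolong(c)                         # warm start on the finer grid
        c = fit(c, pts, y, steps, lr)
    return c

# Usage: synthetic data drawn from a CPWL target on the unit square.
rng = np.random.default_rng(0)
pts = rng.random((2000, 2))
y = np.abs(pts[:, 0] - 0.5) + np.abs(pts[:, 1] - 0.5)
c = coarse_to_fine(pts, y)
mse = np.mean((evaluate(c, pts) - y) ** 2)
print(c.shape, mse)
```

Because every cell is split along the same diagonal and the grid spacing is halved at each level, the finer triangulation refines the coarser one. Sampling therefore reproduces the coarse function exactly, and each finer level starts from the loss already reached on the previous one.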

The following paper provides helpful background: 10.1109/OJSP.2021.3136488

The image above is taken from the paper: https://onlinelibrary.wiley.com/doi/10.1111/j.1467-8659.2011.01853.x.

- Supervisors
  - Mehrsa Pourya, mehrsa.pourya@epfl.ch
  - Michael Unser, michael.unser@epfl.ch