Deep neural networks: learning with splines

Spring 2017

Master Semester Project

Master Diploma

Project: 00326

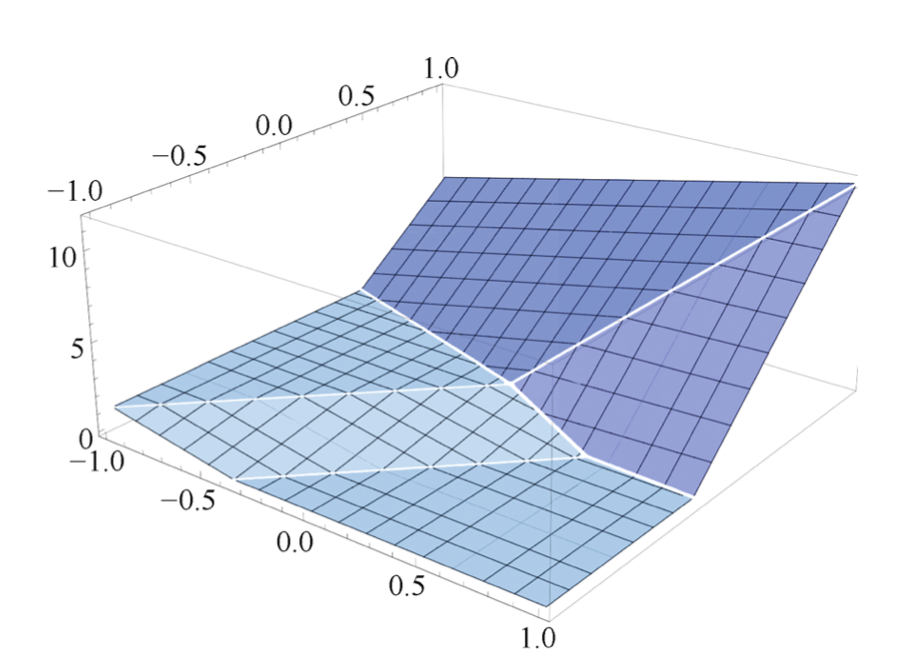

A recent paper (Poggio et al., 2015) points out that deep neural networks with ReLU activation functions can be interpreted as hierarchical splines. The purpose of this project is to exploit this connection in order to gain further understanding of such networks and to improve their performance. Following a formal statement of the problem, the project will consist of an extensive (but informed) experimental comparison of different network configurations in order to determine the most promising one. The idea is to keep the number of parameters fixed (total number of ReLUs and linear weights) and to investigate the effect of the architecture, in particular the number of layers, on the prediction error.
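To make the spline interpretation and the fixed-budget comparison concrete, here is a minimal sketch in Python with NumPy. It is an illustration only, not part of the project statement: the helper names (`shallow_relu_net`, `knots`, `count_parameters`), the example weights, and the convention of counting biases as parameters are all assumptions. A one-hidden-layer ReLU network with scalar input is a piecewise-linear function (a linear spline) whose knots lie at `-b[k] / w[k]`; the last helper counts parameters so that architectures of different depth can be matched.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def shallow_relu_net(x, w, b, a, c):
    """f(x) = c + sum_k a[k] * relu(w[k] * x + b[k]) for scalar inputs x."""
    x = np.asarray(x, dtype=float)
    return c + relu(np.outer(x, w) + b) @ a


def knots(w, b):
    """Breakpoints of the spline: the inputs where a ReLU switches on or off."""
    return np.sort(-b / w)


def count_parameters(widths):
    """Weights plus biases of a fully connected net (assumed counting convention)."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(widths[:-1], widths[1:]))


# Hypothetical weights, chosen only for illustration.
w = np.array([1.0, -2.0, 0.5])
b = np.array([0.0, 1.0, -1.0])
a = np.array([1.0, 0.5, -3.0])
c = 0.2

print("knots:", knots(w, b))

# Between two consecutive knots the network is affine, so the value at a
# midpoint equals the average of the values at the two end points.
xs = np.array([0.6, 1.25, 1.9])
ys = shallow_relu_net(xs, w, b, a, c)
print("affine between knots:", np.isclose(ys[1], 0.5 * (ys[0] + ys[2])))

# Two architectures with the same budget under the assumed convention:
# one hidden layer of 15 units versus two hidden layers of 5 units each.
print(count_parameters([1, 15, 1]), count_parameters([1, 5, 5, 1]))
```

Under this convention, comparing `[1, 15, 1]` with `[1, 5, 5, 1]` is one way to vary the number of layers at a fixed parameter count; the project may well adopt a different counting rule, for instance one that excludes biases.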

This project offers an excellent opportunity to gain a deep understanding of fundamental aspects of deep learning networks.

- Supervisors
  - Anaïs Badoual, anais.badoual@epfl.ch, 31136, BM 4142
  - Michael Unser, michael.unser@epfl.ch, 021 693 51 75, BM 4.136
  - Shayan Aziznejad