Learning Spline-based activations for very deep learning

Spring 2019

Master Semester Project

Project: 00370

In neural networks, typically only the weights of the linear layers are learned, while the non-linear activation functions of the neurons are kept fixed. However, learning these activation functions has gained popularity, with promising results. These activations are generally assumed to lie in a family of functions parameterized by a small number of learnable parameters.

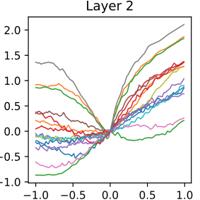

Recently, it was shown in [1] that, within a large family of activation functions, the optimal ones are composed of multiple ReLUs (Rectified Linear Units) whose weights and locations are not known a priori. However, learning the locations and coefficients of these ReLUs can be computationally challenging. We therefore use a spline-based parameterization to learn these coefficients, which decreases the computational complexity.
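To make the two views concrete, here is a minimal sketch in plain Python. It is not the project's module. It assumes a piecewise-linear activation with an affine term and knots placed on the interior points of a uniform grid. All names (`relu_sum_activation`, `spline_activation`, `KNOTS`, `VALUES`, and so on) and all numeric values are hypothetical and chosen only for illustration. The first function evaluates the activation as a weighted sum of ReLUs. The second evaluates the same function from its values at the grid points, by linear interpolation.

```python
def relu(x):
    return max(0.0, x)


def relu_sum_activation(x, b0, b1, weights, knots):
    """Affine term plus a weighted sum of shifted ReLUs (cost grows with the number of knots)."""
    return b0 + b1 * x + sum(a * relu(x - t) for a, t in zip(weights, knots))


def spline_activation(x, values, x_min, step):
    """Linear spline on a uniform grid; values[k] is the activation at x_min + k * step.

    Outside the grid, the first or last segment is extended linearly.
    """
    n = len(values)
    # Locate the segment containing x, clamped to a valid segment index.
    k = int((x - x_min) // step)
    k = min(max(k, 0), n - 2)
    # Local coordinate within the segment (in [0, 1] when x lies inside the grid).
    t = (x - x_min) / step - k
    return (1.0 - t) * values[k] + t * values[k + 1]


# Hypothetical example: grid points -2, -1, 0, 1, 2 with knots on the interior points.
B0, B1 = 0.1, 0.3
KNOTS = [-1.0, 0.0, 1.0]
WEIGHTS = [0.5, -1.0, 2.0]
X_MIN, STEP, N_POINTS = -2.0, 1.0, 5

# Spline coefficients: the activation sampled at the grid points.
VALUES = [
    relu_sum_activation(X_MIN + k * STEP, B0, B1, WEIGHTS, KNOTS)
    for k in range(N_POINTS)
]
```

In this sketch the grid-based evaluation touches only two coefficients per input, whatever the number of knots, whereas the ReLU sum visits every knot. This is one plausible reading of the reduced complexity mentioned above; the parameterization actually used in the project may differ. In a PyTorch version, the grid values would typically be stored as an `nn.Parameter` so that they can be trained by backpropagation.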

The goal of this project is to learn the spline-based activation functions in very deep neural networks.

The task will be to build very deep neural networks with learnable spline-based activations and to evaluate their performance on classification problems. The project will be in PyTorch, and the learnable activation module is already available.

Pre-requisites: Either existing knowledge of deep learning, PyTorch, and signal processing, or taking courses on these subjects in parallel with the project.

[1] M. Unser, "A representer theorem for deep neural networks," arXiv, 2018.

- Supervisors

- Harshit Gupta, harshit.gupta@epfl.ch, +41 21 69 35142, BM 4140

- Michael Unser, michael.unser@epfl.ch, 021 693 51 75, BM 4.136