Benchmarking of Proximal Algorithms for Solving Regularized Inverse Problems

Autumn 2020

Master Semester Project

Project: 00389

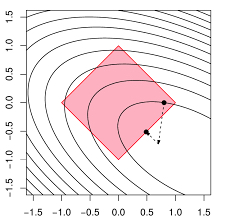

Inverse problems with l1 regularization are popular method for signal reconstruction. This is due to the fact that they promote sparse solutions, i.e., with few nonzero coefficients, and the observation that many real-world signals are sparse in a certain basis. However, due to the non-differentiability of the l1 norm, such problems do not admit a close-form solution; they are thus typically solved using iterative algorithms based on the proximal operator of the l1 norm. In this project, we propose to benchmark two of these proximal algorithms, the standard and very popular alternating direction method of multipliers (ADMM) [1], and a primal-dual splitting algorithm introduced by Condat [2]. The goal will be to compare the performance of these algorithms in various settings. The student should have a strong interest in optimization.

References:

[1] Boyd, Stephen, et al. "Distributed optimization and statistical learning via the alternating direction method of multipliers." Foundations and Trends® in Machine learning 3.1 (2011): 1-122.

[2] Condat, Laurent. "A primaldual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms." Journal of Optimization Theory and Applications 158.2 (2013): 460-479.

- Supervisors

- Thomas Debarre, thomas.debarre@epfl.ch, BM 4.138

- Michael Unser, michael.unser@epfl.ch, 021 693 51 75, BM 4.136