Learning Robust Neural Networks via Controlling their Lipschitz Regularity

Autumn 2020

Master Semester Project

Project: 00393

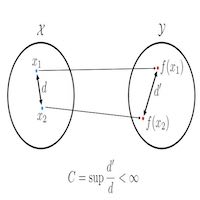

It has been shown that augmenting the training data with adversarial perturbations, known as adversarial training, makes models more robust to adversarial attacks. However, adversarial training is computationally expensive to apply to large-scale datasets. Moreover, adversarially trained models tend to overfit to the specific attack used during training, and their accuracy on unperturbed images drops. In addition, methods that improve robustness to non-adversarial corruptions have received comparatively little attention. Recently, we developed a framework for learning the activation functions of deep neural networks, with the aim of controlling the global Lipschitz constant of their input-output relation. The goal of this project is to investigate the effect of this framework on the global robustness of the network in various settings.

The student should have solid programming skills, in particular familiarity with PyTorch, and a general understanding of the main concepts of deep learning.
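To make the notion of a global Lipschitz constant concrete, the sketch below computes a standard upper bound for a feedforward network x -> W_L s(W_{L-1} ... s(W_1 x)) with pointwise activations s: the product of the spectral norms of the weight matrices and the Lipschitz constants of the activations. This is a generic NumPy illustration under stated assumptions, not the framework mentioned above; the function names and the choice of continuous piecewise-linear activations are hypothetical. For such an activation, the Lipschitz constant is its largest absolute slope, so constraining the slopes of learned activations directly caps this bound.

```python
import numpy as np

# Generic illustration only; function names are hypothetical and this is
# not the framework described in the project text.


def piecewise_linear_lipschitz(knots, values):
    """Lipschitz constant of the continuous piecewise-linear function that
    interpolates (knots, values), assuming it is continued with its end
    slopes outside the knots: the largest absolute slope."""
    slopes = np.diff(values) / np.diff(knots)
    return float(np.max(np.abs(slopes)))


def lipschitz_upper_bound(weights, activation_lips):
    """Upper bound (w.r.t. the l2 norm) on the Lipschitz constant of
    x -> W_L s_{L-1}(W_{L-1} ... s_1(W_1 x)).

    weights: list of 2-D arrays W_1, ..., W_L
    activation_lips: Lipschitz constants of the L-1 pointwise activations
    """
    if len(activation_lips) != len(weights) - 1:
        raise ValueError("expected one activation between consecutive layers")
    bound = 1.0
    for W in weights:
        # ord=2 gives the spectral norm (largest singular value)
        bound *= np.linalg.norm(W, ord=2)
    for lip in activation_lips:
        bound *= lip
    return float(bound)


if __name__ == "__main__":
    W1 = np.array([[2.0, 0.0], [0.0, 1.0]])  # spectral norm 2
    W2 = np.array([[0.5, 0.5]])              # spectral norm sqrt(0.5)
    lip_act = piecewise_linear_lipschitz([-2.0, 0.0, 2.0], [-1.0, 0.0, 3.0])
    print(lip_act)                                    # 1.5
    print(lipschitz_upper_bound([W1, W2], [lip_act]))  # about 2.1213
```

The bound is usually loose because it ignores how consecutive layers interact, but it is cheap to compute and decreases whenever any single factor is reduced.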

Supervisors

- Jaejun Yoo, jaejun.yoo@epfl.ch, BM 4.141
- Michael Unser, michael.unser@epfl.ch, 021 693 51 75, BM 4.136
- Shayan Aziznejad