Asymptotic stability analysis of Echo-State Networks

2021

Master Diploma

Project: 00418

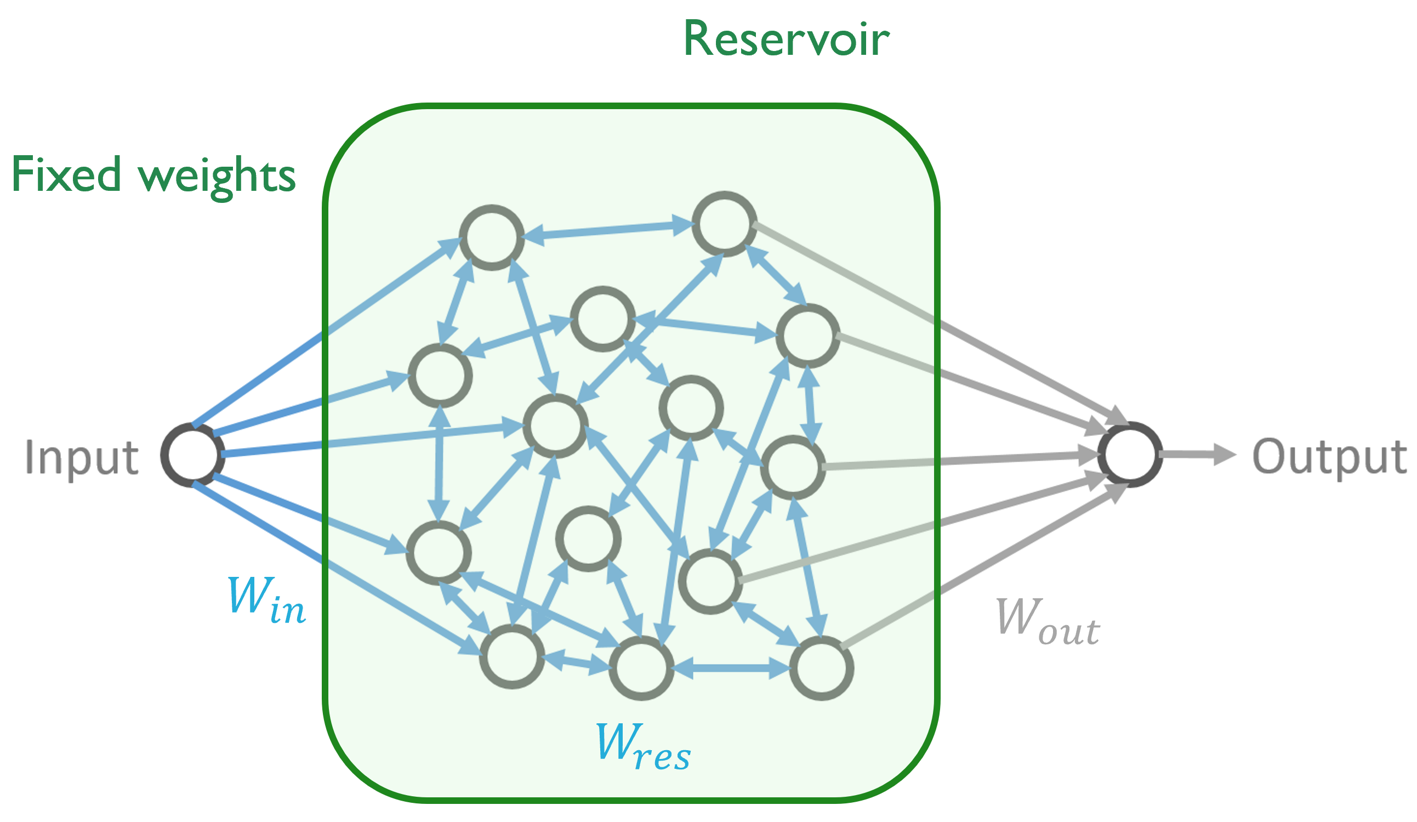

Analyzing the stability of neural networks is a crucial topic now that machine learning is applied in many different settings. In this project, we will investigate the stability of random Recurrent Neural Networks. These so-called Echo-State Networks have randomly initialized, fixed internal weights, and only the output layer is trained for the task at hand. Depending on how their hyperparameters are tuned, such networks can exhibit stable or chaotic behavior, which directly impacts the performance of the algorithm. Recently, an asymptotic limit with infinitely many neurons has been derived, and we will study how it sheds new light on the stability-to-chaos transition of Echo-State Networks. The different algorithms will be benchmarked on chaotic time series prediction.
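To make the setting concrete, here is a minimal sketch of an Echo-State Network in Python with NumPy. It is an illustration under stated assumptions, not the project's actual implementation: the function names, the `tanh` activation, the Gaussian weights, the ridge-regression readout, and the use of the spectral radius as the tuned hyperparameter are all choices made for this example. The logistic map stands in for a chaotic time series.

```python
import numpy as np


def make_reservoir(n_res, n_in, spectral_radius=0.9, input_scale=1.0, seed=0):
    """Draw random internal and input weights; they are never trained.

    Assumption: Gaussian weights, with the internal matrix rescaled to a
    chosen spectral radius (a common knob for the stable/chaotic regime).
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_res, n_res)) / np.sqrt(n_res)
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = input_scale * rng.normal(size=(n_res, n_in))
    return W, W_in


def run_reservoir(W, W_in, inputs):
    """Iterate x_{t+1} = tanh(W x_t + W_in u_t) and collect all states."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(inputs), W.shape[0]))
    for t, u in enumerate(inputs):
        x = np.tanh(W @ x + W_in @ u)
        states[t] = x
    return states


def train_readout(states, targets, ridge=1e-6):
    """Fit only the linear output layer, here by ridge regression."""
    A = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)


if __name__ == "__main__":
    # Hypothetical benchmark: one-step prediction of the logistic map.
    T = 2000
    series = np.empty(T)
    series[0] = 0.3
    for t in range(T - 1):
        series[t + 1] = 4.0 * series[t] * (1.0 - series[t])

    inputs, targets = series[:-1, None], series[1:, None]
    W, W_in = make_reservoir(n_res=200, n_in=1, spectral_radius=0.9)
    states = run_reservoir(W, W_in, inputs)

    washout = 100  # discard the initial transient before fitting
    W_out = train_readout(states[washout:], targets[washout:])
    pred = states[washout:] @ W_out
    print("training MSE:", np.mean((pred - targets[washout:]) ** 2))
```

Varying `spectral_radius` (and `input_scale`) in this sketch is one simple way to move the reservoir between a regime where the influence of past inputs fades and one where the dynamics become chaotic.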

- Supervisors

- Jonathan Dong, jonathan.dong@epfl.ch, BM 4.141

- Michael Unser, [michael.unser@epfl.ch](mailto:michael.unser@epfl.ch), 021 693 51 75, BM 4.136