Sparse Unrolled Auto-Encoders for Interpretable Analysis of Neural Data

Spring 2025

Master Diploma

Project: 00461

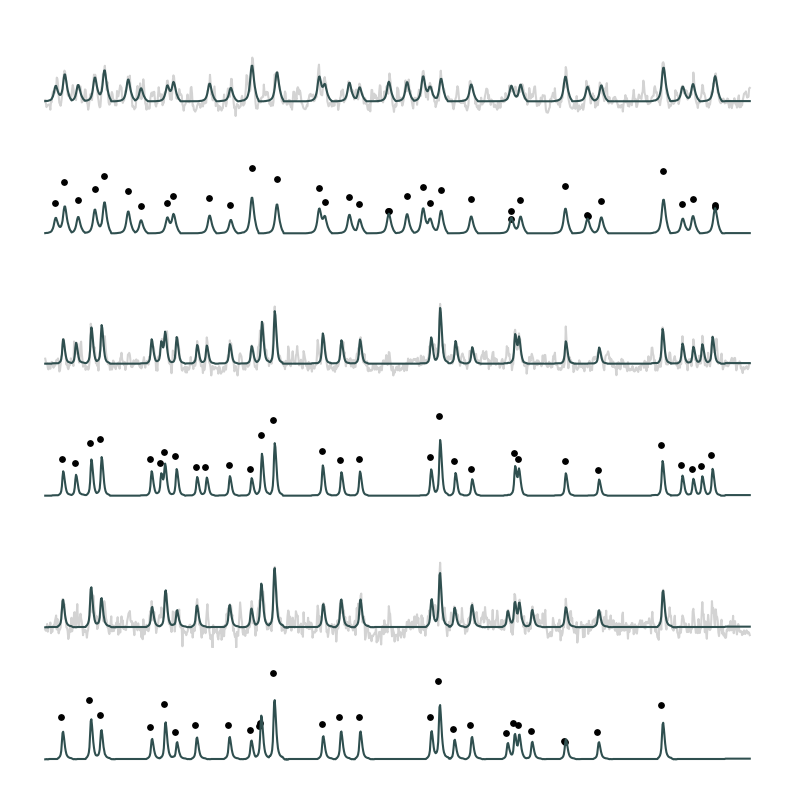

Sparse autoencoders are widely used in machine learning to improve the interpretability of large language models (LLMs). In this project, we aim to investigate their potential for understanding neural computation. We use a recently developed interpretable deep learning framework, DUNL (Deconvolutional Unrolled Neural Learning), introduced by Tolooshams, B. et al. in their 2024 publication, "Interpretable Deep Learning for Deconvolutional Analysis of Neural Signals". DUNL deconvolves multiplexed neural signals into temporally localized, sparse components, which allows polysemantic signals to be broken down into more interpretable, monosemantic responses.
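The idea of deconvolution can be made concrete with the generative model usually assumed in convolutional sparse coding: the recorded signal is a sum of kernels, each convolved with a sparse train of events. The following is a minimal pure-Python sketch under that assumption; the helper names `convolve` and `synthesize` and the toy kernels are hypothetical illustrations, not code from DUNL.

```python
def convolve(kernel, code):
    """Full 1-D convolution of a kernel with a (sparse) code, in pure Python."""
    out = [0.0] * (len(code) + len(kernel) - 1)
    for t, amp in enumerate(code):
        if amp == 0:
            continue  # only nonzero events contribute a shifted copy of the kernel
        for k, h in enumerate(kernel):
            out[t + k] += amp * h
    return out


def synthesize(kernels, codes):
    """Multiplexed signal y = sum_c (h_c * x_c); assumes equal-length kernels and codes."""
    y = [0.0] * (len(codes[0]) + len(kernels[0]) - 1)
    for h, x in zip(kernels, codes):
        for t, v in enumerate(convolve(h, x)):
            y[t] += v
    return y


# Two hypothetical kernels and their sparse event trains (one event each).
kernels = [[1.0, 0.5, 0.25], [0.0, 1.0, -1.0]]
codes = [[0, 2, 0, 0, 0, 0], [0, 0, 0, 1, 0, 0]]
y = synthesize(kernels, codes)
print(y)  # [0.0, 2.0, 1.0, 0.5, 1.0, -1.0, 0.0, 0.0]
```

Deconvolution inverts this mapping: given only `y` and the kernels (which DUNL learns from data), it recovers the sparse codes, so each nonzero entry can be read as one temporally localized event attributed to one kernel, even where the kernel responses overlap in time.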

DUNL is a convolutional sparse coding method that unrolls the Iterative Shrinkage-Thresholding Algorithm (ISTA) to solve the LASSO problem. Although effective in practice, it requires considerable tuning, and its complex architecture can be difficult to explain to the neuroscience community. To address these limitations, we are exploring the unrolling of a greedy sparse approximation algorithm, Matching Pursuit (Mallat, S. G. and Zhang, Z., 1993, "Matching Pursuits with Time-Frequency Dictionaries"). Because Matching Pursuit builds the sparse code greedily, selecting one dictionary atom at a time, it is expected to be easier to tune and more straightforward to explain, offering a theoretically simpler approach.
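To illustrate the contrast between the two solvers, here is a minimal sketch of a fixed number of ISTA iterations for the LASSO (each iteration corresponds to one layer of an unrolled network) next to plain Matching Pursuit. It is a simplification under stated assumptions, not DUNL's implementation: the dictionary is fixed rather than learned by backpropagation; it is a small dense matrix given as a list of unit-norm atoms (a convolutional dictionary can be viewed the same way, with atoms that are shifted copies of each kernel); and the step size 1/‖D‖_F² is a conservative choice. The function names `ista` and `matching_pursuit` are hypothetical.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def soft_threshold(v, thr):
    """Proximal operator of thr * |v|: shrink v toward zero by thr."""
    if v > thr:
        return v - thr
    if v < -thr:
        return v + thr
    return 0.0


def ista(atoms, y, lam, n_iter):
    """Fixed-depth ISTA for min_x 0.5 * ||y - D x||^2 + lam * ||x||_1.

    `atoms` are the columns of D; each loop iteration plays the role of one
    layer in an unrolled network (here D is fixed, not learned).
    """
    # 1/L step with L = ||D||_F^2, an upper bound on the gradient's
    # Lipschitz constant ||D||_2^2 (safe but conservative).
    L = sum(dot(d, d) for d in atoms)
    x = [0.0] * len(atoms)
    for _ in range(n_iter):
        recon = [sum(x[j] * atoms[j][i] for j in range(len(atoms))) for i in range(len(y))]
        residual = [yi - ri for yi, ri in zip(y, recon)]
        # Gradient step on the data term, then soft-thresholding for the L1 term.
        x = [soft_threshold(x[j] + dot(atoms[j], residual) / L, lam / L)
             for j in range(len(atoms))]
    return x


def matching_pursuit(atoms, y, n_iter):
    """Greedy Matching Pursuit with unit-norm atoms.

    At each step, pick the atom most correlated with the residual, add that
    correlation to its coefficient, and subtract its contribution.
    """
    x = [0.0] * len(atoms)
    residual = list(y)
    for _ in range(n_iter):
        corr = [dot(d, residual) for d in atoms]
        j = max(range(len(atoms)), key=lambda k: abs(corr[k]))
        x[j] += corr[j]
        residual = [ri - corr[j] * di for ri, di in zip(residual, atoms[j])]
    return x


# Toy example: the standard basis of R^3 as a (hypothetical) dictionary.
atoms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
y = [3.0, 0.0, 1.0]
print(matching_pursuit(atoms, y, n_iter=2))  # [3.0, 0.0, 1.0]
print([round(v, 6) for v in ista(atoms, y, lam=0.5, n_iter=100)])  # [2.5, 0.0, 0.5]
```

The toy example shows the tuning difference in miniature: ISTA's output depends on the regularization weight `lam` (here it shrinks every coefficient by 0.5), and, once unrolled, on the number of layers, whereas Matching Pursuit is controlled mainly by how many atoms it is allowed to select.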

- Supervisors
  - Bahareh Tolooshams
  - Sara Matias
  - Stanislas Ducotterd